Improving Government Communication with AI

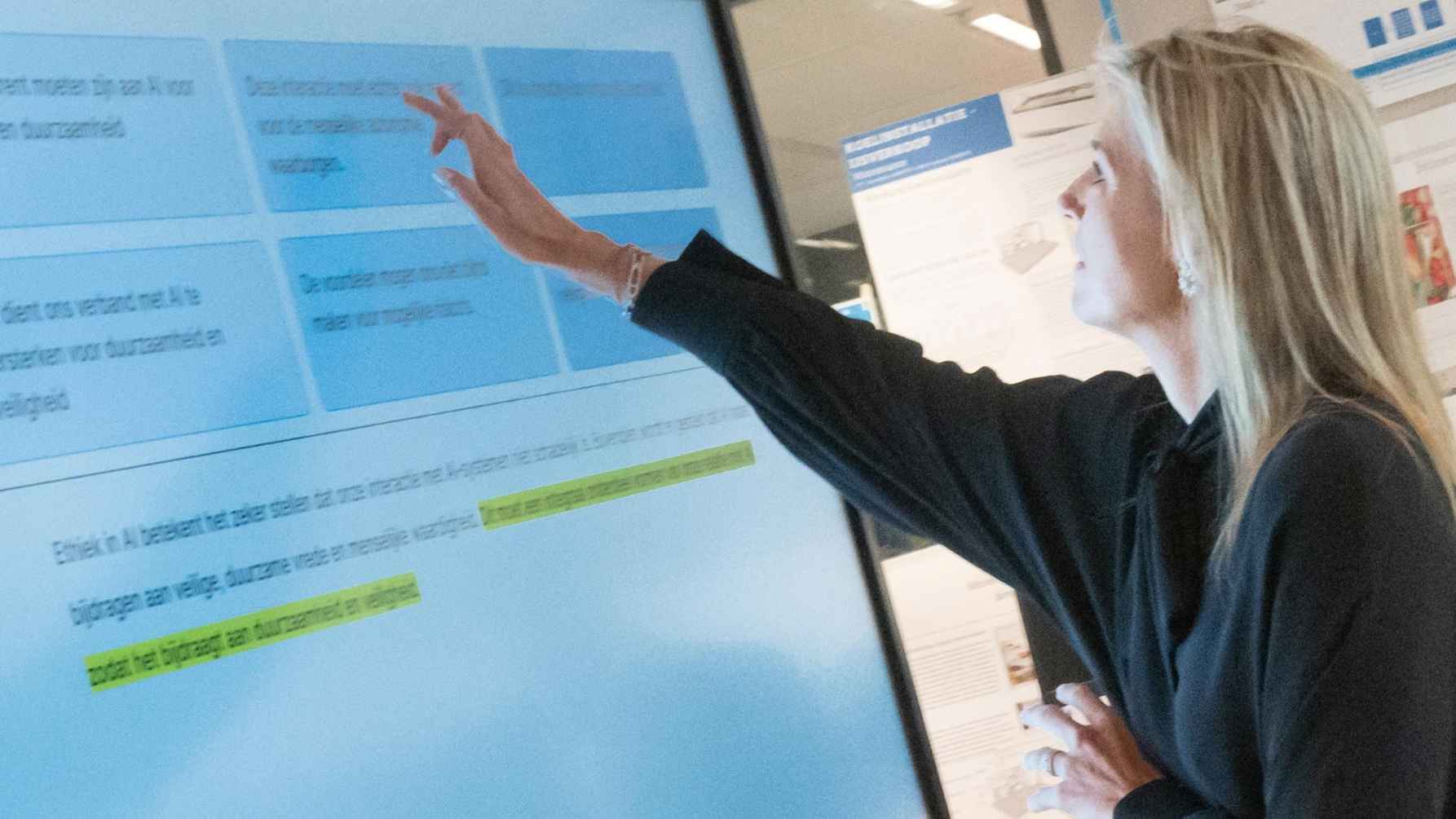

In the Duidelijke Taal project, we collaborate with various partners to explore how AI can be used responsibly to simplify government communication, so that it aligns better with citizens' experiences.

Technology is not neutral; it affects the agency of people (and non-humans) who interact with it, either directly or indirectly. As a result, technology always has a societal and ethical dimension. The Responsible IT research group explores how we can consider the ethical and societal implications of digital technology—particularly AI—when designing, developing, and using it.



In the Netherlands, 2.5 million people struggle with reading and writing. To keep these difficulties from being passed on to the next generation, the Verhaal Speciaal project is developing an AI-powered app that enables low-literate children and parents to read together and improve their language skills.

The AI, Media & Democracy Lab brings together interdisciplinary groups to anticipate future challenges and share knowledge. The lab aims to drive innovation in AI applications that reinforce the democratic role of media.

The experts of the Responsible IT research group.

Since April 1, 2024, Pascal Wiggers has been the professor of the Responsible IT research group at the Faculty of Digital Media & Creative Industries (FDMCI). Before this, he was associate professor of Responsible Artificial Intelligence (AI), part of the Responsible IT research group. In that role, Wiggers focused on developing AI based on public values, taking into account its individual, social, and ethical consequences.